# File streaming

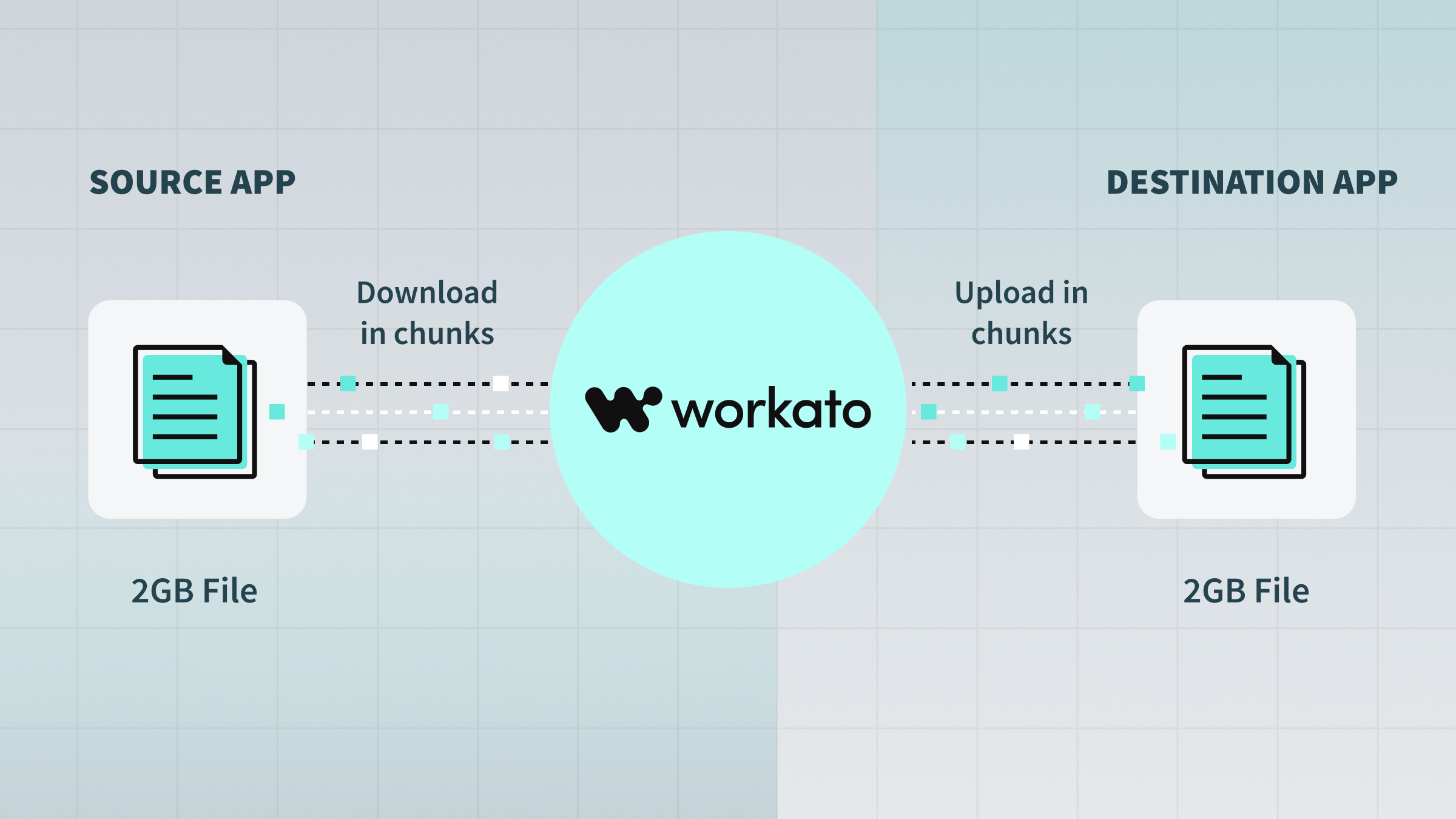

File streaming is a technique used to process a large file by dividing it into smaller pieces, transferring one piece at a time, and then putting the pieces back in order.

For example, when Workato uses file streaming to transfer a file between systems, each piece is downloaded from the source and uploaded to the destination in sequence.

File streaming is useful when processing a large file in one piece is impractical or impossible because of size or memory limits at the source or destination app. Because Workato processes smaller chunks one at a time, each transfer stays within each app's constraints. This makes it possible for Workato to transfer files of any size between apps that support streaming. Workato also supports file streaming for large-volume storage and data transformations.
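The chunk-by-chunk download and upload sequence described above can be sketched as follows. This is a minimal illustration, not Workato's implementation: `io.BytesIO` objects stand in for the source and destination apps, and `download_chunks`, `stream_transfer`, and the `chunk_size` value are hypothetical names chosen for the example. The sketch returns the largest chunk it held so you can see that memory use depends on the chunk size, not on the file size.

```python
import io
from typing import BinaryIO, Iterator, Tuple


def download_chunks(source: BinaryIO, chunk_size: int) -> Iterator[bytes]:
    """Yield the source one chunk at a time instead of reading it all at once."""
    while True:
        chunk = source.read(chunk_size)
        if not chunk:  # empty bytes means the end of the source was reached
            return
        yield chunk


def stream_transfer(source: BinaryIO, destination: BinaryIO, chunk_size: int) -> Tuple[int, int]:
    """Upload each chunk to the destination as soon as it is downloaded, in order.

    Returns (chunks_transferred, largest_chunk_held). Only one chunk is held
    in memory at a time, so the second value never exceeds chunk_size.
    """
    chunks = 0
    largest = 0
    for chunk in download_chunks(source, chunk_size):
        destination.write(chunk)  # chunks arrive in order, so appending reassembles the file
        chunks += 1
        largest = max(largest, len(chunk))
    return chunks, largest


# Usage: a 10,000-byte "file" moved in hypothetical 1,024-byte chunks.
src = io.BytesIO(b"x" * 10_000)
dst = io.BytesIO()
print(stream_transfer(src, dst, chunk_size=1024))  # (10, 1024)
```

Increasing the file size increases the number of chunks but not the amount of data held at once, which is why streaming can stay within an app's memory limits.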

The following diagram illustrates how file streaming works:

How file streaming works

# Use cases

You can use Workato's file streaming for use cases such as the following:

- Send data in bulk to applications

- Receive data from applications using bulk triggers or bulk actions

- Store large files in Workato FileStorage or other file systems such as SFTP and S3

- Send data to or receive data from SQL Transformations

For example, with file streaming, you can:

- Extract a file with 1 million rows of leads data from an on-prem file server and load it into a Google BigQuery table

- Accumulate 100,000 rows from a non-streaming source such as HubSpot in Workato FileStorage using batch actions, and then stream all the rows from FileStorage to Salesforce bulk APIs at once

- Extract new or updated contact records from Marketo using bulk triggers and aggregate the records with other data sources in SQL Transformations
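The second example above (accumulate batches in storage, then stream them out at once) can be sketched like this. It is a conceptual model under stated assumptions: an in-memory `io.StringIO` CSV buffer stands in for Workato FileStorage, and `accumulate_batches`, `stream_csv`, the field names, and the row data are all hypothetical. It does not use any Workato, HubSpot, or Salesforce API.

```python
import csv
import io
from typing import Iterable, Iterator, List


def accumulate_batches(batches: Iterable[List[dict]], fieldnames: List[str]) -> io.StringIO:
    """Append each batch of rows to a single CSV buffer (a stand-in for FileStorage)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    for batch in batches:  # each batch might come from one batch action
        writer.writerows(batch)
    buffer.seek(0)  # rewind so the accumulated file can be read from the start
    return buffer


def stream_csv(buffer: io.StringIO, chunk_size: int) -> Iterator[str]:
    """Read the accumulated CSV back out one chunk at a time."""
    while True:
        chunk = buffer.read(chunk_size)
        if not chunk:
            return
        yield chunk


# Usage: two hypothetical batches accumulated, then streamed as 16-character chunks
# that a bulk-upload step could consume one at a time.
batches = [
    [{"id": "1", "email": "a@example.com"}],
    [{"id": "2", "email": "b@example.com"}, {"id": "3", "email": "c@example.com"}],
]
stored = accumulate_batches(batches, ["id", "email"])
chunks = list(stream_csv(stored, chunk_size=16))
```

The design separates the two phases: batch-sized writes build up one file, and a single streaming read delivers the whole file to the destination.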

# Comparison with batch processing

Batch processing splits records across multiple jobs, each of which processes a batch of records. The batch size determines how many records a single job can process. Workato creates as many jobs as needed to process all relevant records, which makes it possible to process very large files. However, because of batch size and memory limits, this approach can result in a high volume of API calls, and it does not scale well as the volume of data increases.

In contrast, file streaming uses a single job that processes all relevant records through a single call to a streaming API, transferring the data chunk by chunk. This results in fewer API calls and faster processing, even when the file is very large.
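The difference in job count can be worked out with simple arithmetic. The sketch below assumes a hypothetical batch size of 2,000 records; actual batch limits vary by connector. Batch processing needs one job per batch (a ceiling division), while file streaming handles the same records in one job. If each batch job makes at least one write call to the destination, the batch approach also makes at least that many API calls.

```python
def batch_jobs_needed(total_records: int, batch_size: int) -> int:
    """Jobs created by batch processing: one per batch, rounding up for a partial batch."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return (total_records + batch_size - 1) // batch_size


STREAMING_JOBS = 1  # file streaming processes all records in a single job

total_records = 1_000_000  # e.g. the 1 million rows of leads data from the use cases above
batch_size = 2_000         # hypothetical batch size for illustration only
print(batch_jobs_needed(total_records, batch_size), "batch jobs vs", STREAMING_JOBS, "streaming job")
# 500 batch jobs vs 1 streaming job
```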

Batch triggers and actions should typically be paired with other batch actions. Likewise, streaming-compatible data producers (such as file downloads, bulk triggers, and bulk export actions) must be paired with streaming-compatible data consumers (such as file uploads and bulk create, bulk upsert, and bulk import actions).

We recommend using triggers and actions that support file streaming whenever possible to help:

- Simplify recipe design

- Reduce task count

- Reduce the number of API calls made between sources and destinations

# How to use file streaming

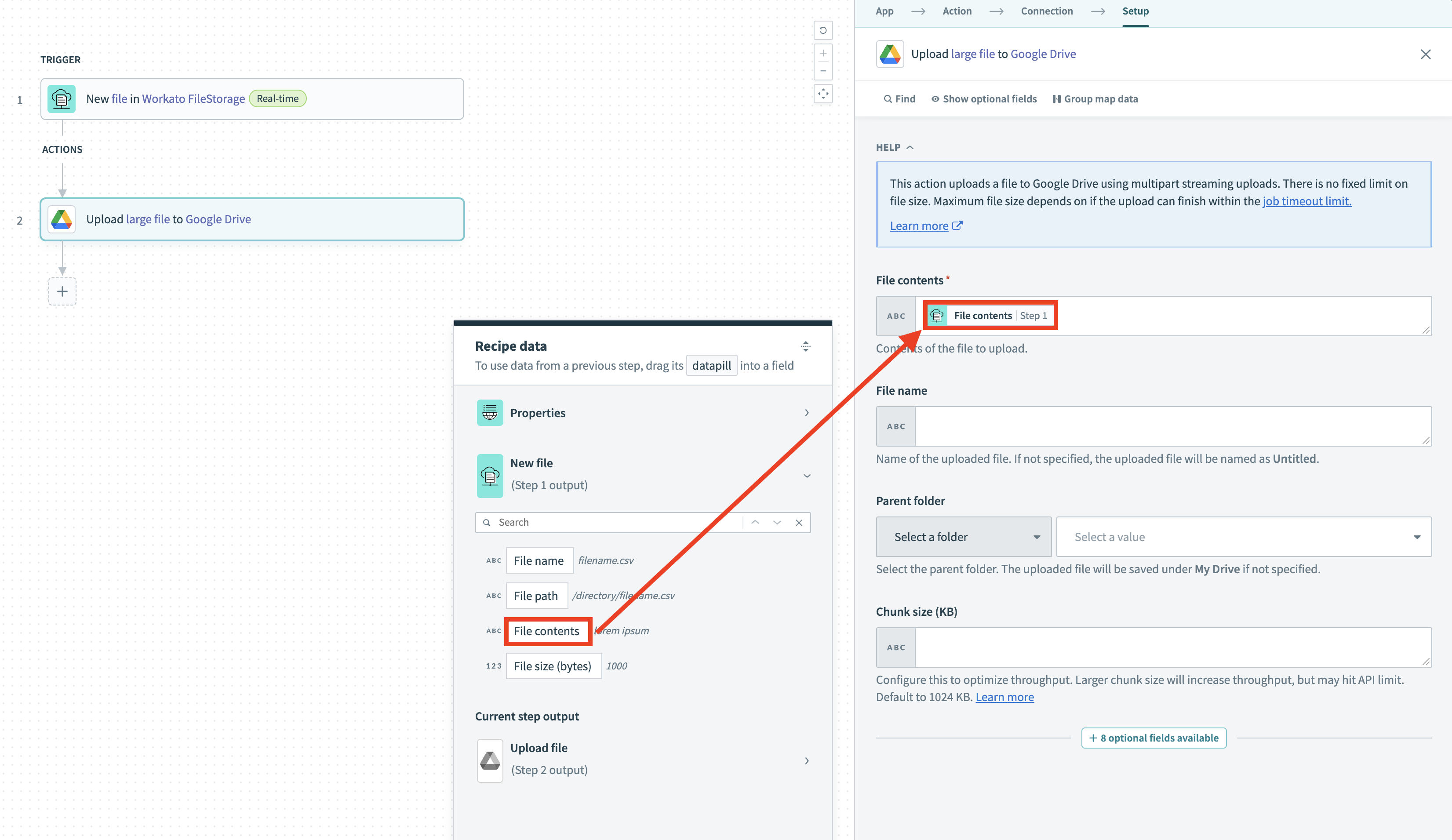

If either the source (such as a file download, bulk trigger, or bulk action) or the destination (such as a file upload or bulk action) supports file streaming, Workato automatically initiates file streaming when you pass a File contents or CSV contents datapill to the File contents input field. No additional configuration is required in the recipe.

Passing a File contents datapill to a File contents input field

Note that the streaming transfer begins when the recipe reaches the consumer action step. File streaming actions are usually long or deferred actions. As a result, streaming actions do not time out, no matter how long the transfer takes.
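One way to picture why nothing moves until the consumer step runs is a lazy Python generator: creating it does no work, and each chunk is produced only when the consumer asks for it. This is an analogy, not Workato's internal design; `producer_step`, `consumer_step`, and the log messages are hypothetical names used only for illustration.

```python
from typing import Iterator, List


def producer_step(chunks: List[bytes], log: List[str]) -> Iterator[bytes]:
    """Hypothetical download step: each chunk is fetched only when requested."""
    for i, chunk in enumerate(chunks):
        log.append(f"download chunk {i}")
        yield chunk


def consumer_step(stream: Iterator[bytes], log: List[str]) -> bytes:
    """Hypothetical upload step: pulling from the stream is what starts the transfer."""
    uploaded = bytearray()
    for i, chunk in enumerate(stream):
        uploaded.extend(chunk)
        log.append(f"upload chunk {i}")
    return bytes(uploaded)


log: List[str] = []
stream = producer_step([b"ab", b"cd"], log)
# At this point log is still empty: the producer has not downloaded anything.
result = consumer_step(stream, log)
# log is now: download chunk 0, upload chunk 0, download chunk 1, upload chunk 1
```

The interleaved log shows the transfer starting at the consumer step and alternating download and upload one chunk at a time.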

# File connector triggers and actions that support file streaming

The following table lists all file connector triggers and actions that support file streaming:

| Connector name | Triggers and actions that support file streaming |
|---|---|
| Amazon S3 | |
| Azure Blob Storage | |
| BIM360 | |
| Box | |
| Dropbox | |
| Egnyte | |
| FileStorage | |
| Files by Workato | Get file from URL |
| FTP | |
| Google Cloud Storage | |
| Google Drive | |
| OneDrive | |
| On-prem | |
| Percolate | |
| SFTP | |
| SharePoint | |

BUILD CUSTOM CONNECTORS

To learn how to build custom connectors that use file streaming, refer to the Connector SDK file streaming guide.

SEE FILE STREAMING IN ACTION

View a sample recipe that uses file streaming to transfer files from an on-prem file system to Amazon S3.

Last updated: 4/10/2024, 5:14:42 PM