# Example Change Data Capture (CDC) recipes

This guide provides examples of different recipe design patterns you can use for CDC (Change Data Capture). These examples demonstrate how you can extract data in bulk and in batches from the following sources:

- SaaS

- Databases

- File systems

- On-premise systems

# SaaS

# Batch extraction from Salesforce

This recipe demonstrates how you can use batch triggers for CDC. This recipe extracts new/updated records in batches from a source system (Salesforce) and loads it into a data warehouse (Snowflake).

Batch extraction from Salesforce

Batch extraction from Salesforce

# Recipe walkthrough

Configure the New/updated records in Salesforce batch trigger. This trigger monitors Salesforce for changes. The trigger exports the records in batch when records are added or updated.

Upsert the data extracted from Salesforce into Snowflake using the Upsert batch of rows action.

# Databases

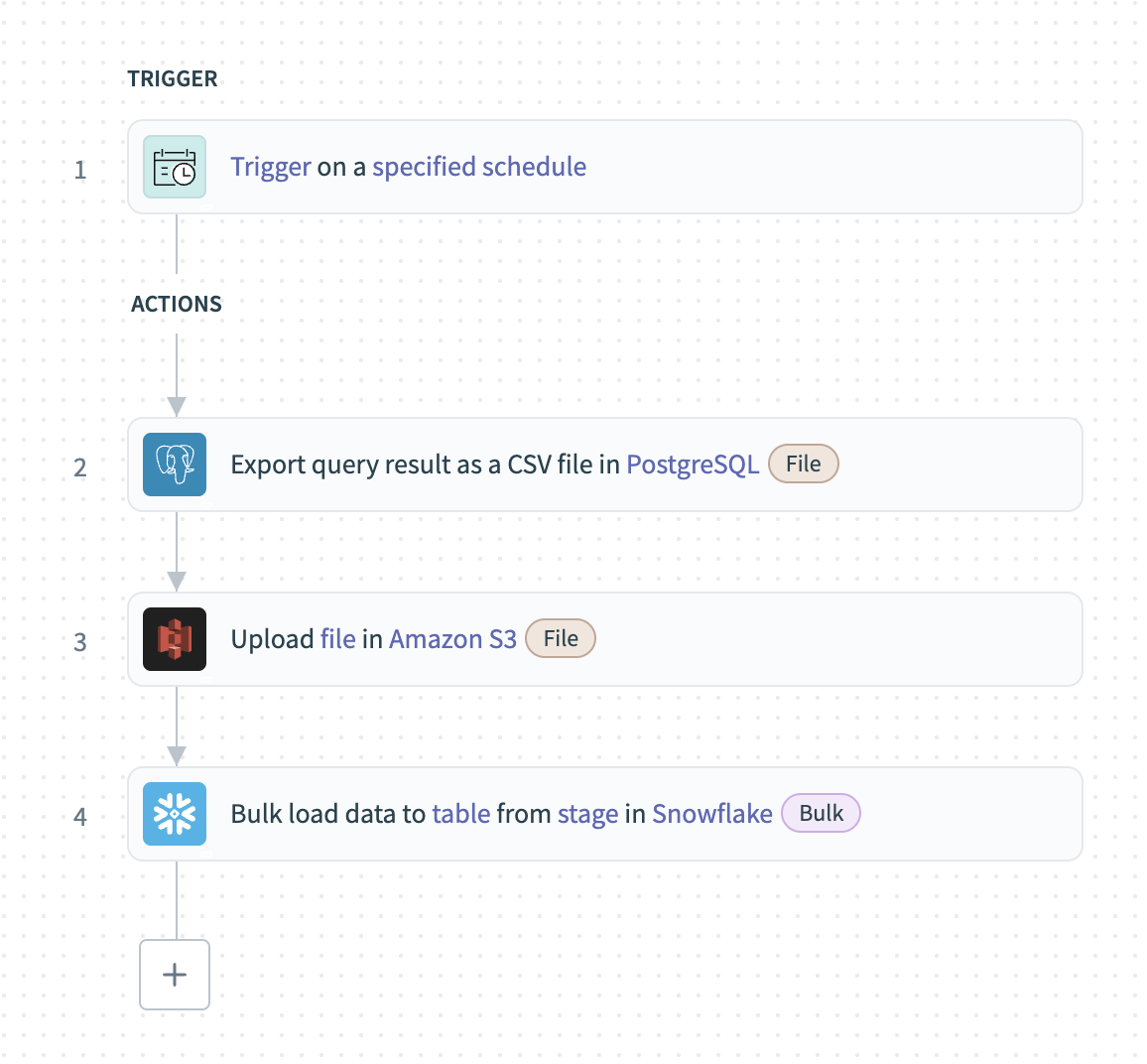

# Scheduled bulk extraction from a database

This example demonstrates how to extract data from databases in bulk. This recipe extracts data from PostgreSQL and loads it into Snowflake.

Bulk extraction from PostgreSQL

Bulk extraction from PostgreSQL

# Supported databases

The following database connectors support bulk actions:

- Snowflake

- PostgreSQL

- MySQL

# Recipe walkthrough

Set up a Scheduler trigger and determine the frequency of bulk extractions.

Export data in bulk using the Export query result from PostgreSQL action.

Upload the file to an external staging location such as an S2 bucket using the AWS S3 Upload file action.

Load the exported data into a table in Snowflake using the Bulk load to table from stage action.

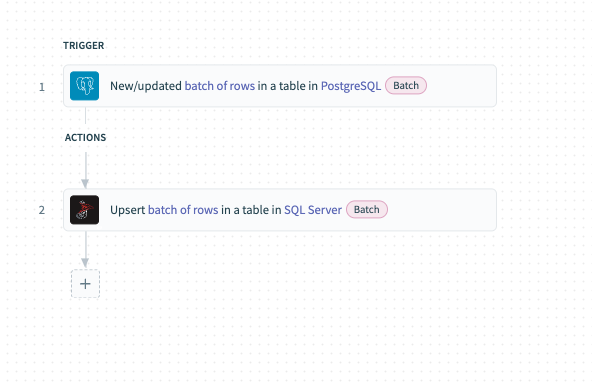

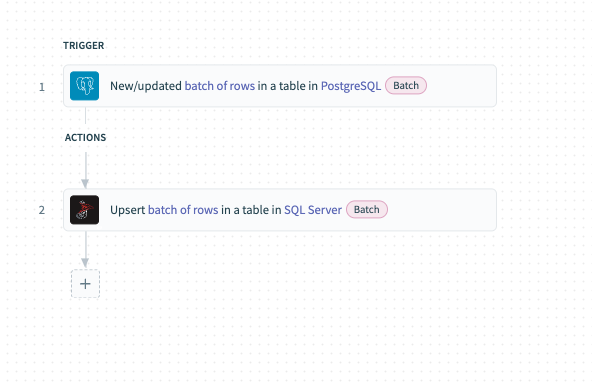

# Batch extraction from a database

This example demonstrates how to extract data from databases in batches and load them to a destination.

# Supported databases

All of Workato's database connectors support batch extraction, including:

- Snowflake

- SQL Server

- PostgreSQL

- Oracle

- MySQL

- Redshift

# Recipe walkthrough

Export new/updated records in batches from PostgreSQL using the New/updated rows in PostgreSQL trigger.

Upsert the exported rows into your data warehouse such as SQL server using the Upsert batch of rows in SQL server action.

# File systems

File connectors in Workato enable users to extract data from file systems using download actions that are compatible with streaming. Pairing this action with a downstream system that is compatible with bulk loads facilitates data extraction from file systems and loading into databases or data warehouses.

You can also pair download actions with bulk actions in app connectors to extract data from file systems and send your data to applications as a whole.

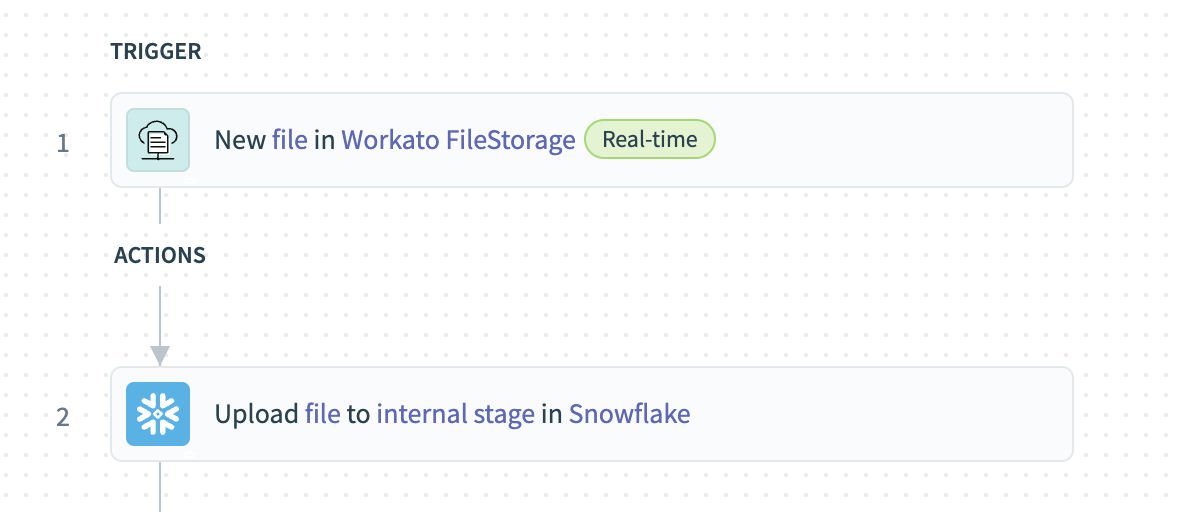

# Bulk extract from FileStorage and load to Snowflake

This example demonstrates how to load aggregated data in Workato FileStorage into a data table in a data warehouse (Snowflake).

Extract data in bulk from FileStorage and load to Snowflake

Extract data in bulk from FileStorage and load to Snowflake

# Recipe walkthrough

Use the New file in Workato FileStorage trigger to monitor a specific directory for new files. A new recipe job begins when files are added to the directory.

Upload the files in bulk to Snowflake using the Upload file to internal stage action.

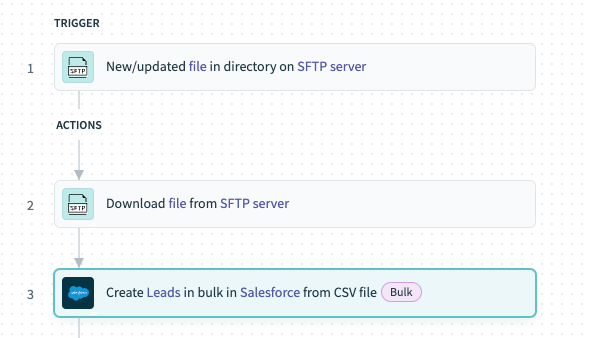

# Bulk extract data from SFTP to Salesforce

This example demonstrates how to sync leads data in SFTP into a cloud CRM system (Salesforce).

Extract data in bulk from SFTP and load to Salesforce

Extract data in bulk from SFTP and load to Salesforce

# Supported connectors

The following connectors support bulk downloads.

All file connectors:

- On-prem files

- Workato FileStorage

- SFTP

- FTP/FTPS

- Google Drive

- Microsoft OneDrive

- Microsoft Sharepoint

- Box

- Dropbox

- BIM 360

- Egnyte

All data lake connectors:

- AWS S3

- Azure Blob Storage

- Google Cloud Storage

# Recipe walkthrough

Use the New file trigger to monitor SFTP for new files. A new recipe job begins when new files are detected in the partner server.

Use the Download file from SFTP server action to fetch the file contents and stream it to the next action.

Use the Create leads in bulk in Salesforce action to consume the streamed bulk data, load it into Salesforce, and create leads.

Last updated: 4/23/2024, 3:50:22 PM